Many virtual private server (VPS) providers do not offer a backup option, and those that do often charge extra to include it in your monthly plan. There is an easy way to avoid that cost: Google Drive already gives us 15 GB of free storage, which is enough to store backups of your up-and-coming websites.

We will use Python and the Google Drive API to do this, and then create a cron job to run the backup for us automatically at regular intervals.

Getting Google Drive API

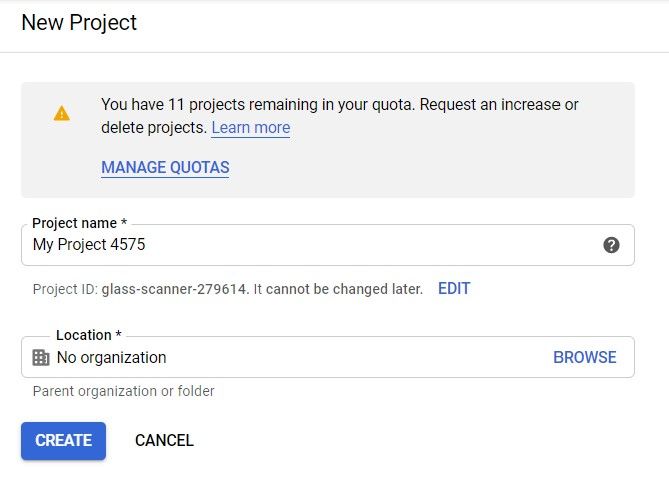

To obtain the token we need for automatic, permanent authentication, we will use a Google Cloud Console project. Go to Google Cloud and create a "New Project".

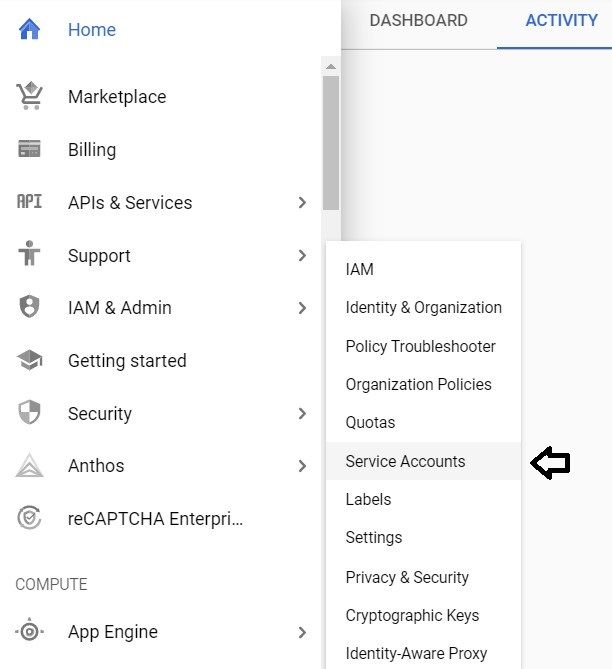

Click "IAM & Admin", then click "Service Accounts".

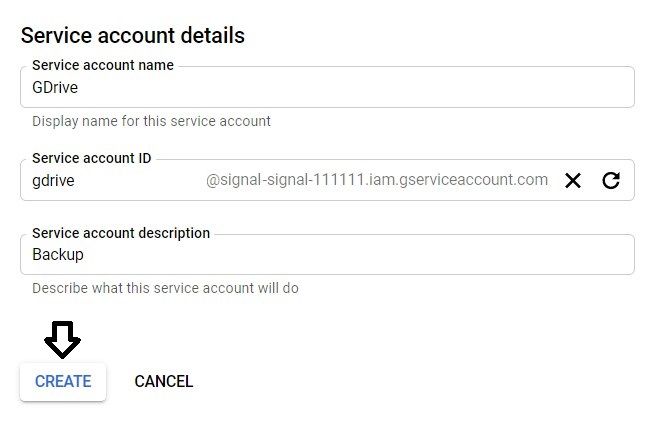

Then click "Create Service Account". Give it any name and ID of your choice, and click "Create".

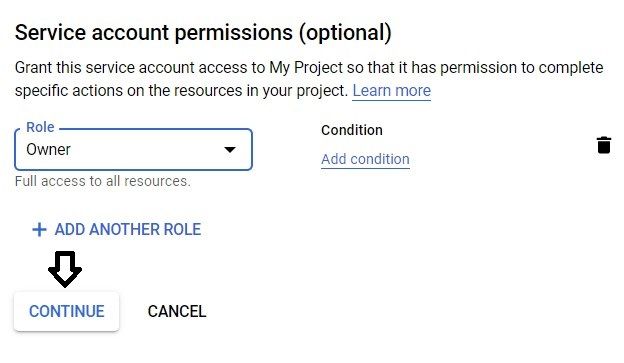

On the next page, select "Owner" as the role. This is needed to give full access to the service account. Then click "Continue".

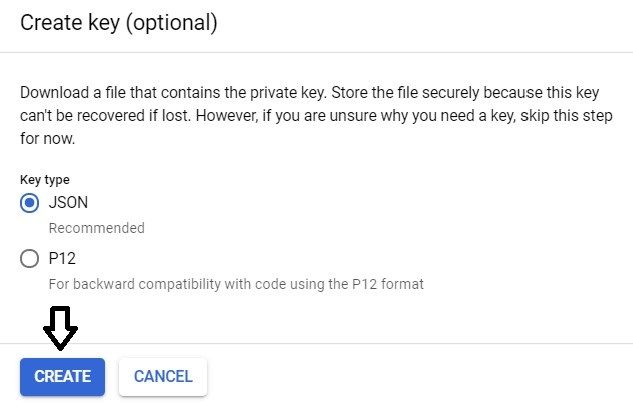

Next, click "Create Key" and then select "JSON". A token file will be downloaded to your computer. Keep it safe: we will need it later, and you cannot download it again.

Make a note of the service account's email address (it ends in `iam.gserviceaccount.com`); we will need it later.
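If you would rather not copy the address by hand, it is also stored inside the downloaded token file. Here is a minimal sketch, assuming the file follows the usual service account key layout with `type`, `client_email` and `private_key` fields. The function name `read_service_account_email` is my own and not part of any library.

```python
import json

# Fields we expect to find in a service account JSON key (assumed layout).
REQUIRED_FIELDS = ("type", "client_email", "private_key")


def read_service_account_email(token_path):
    """Return the client_email stored in a service account JSON key file."""
    with open(token_path, "r", encoding="utf-8") as handle:
        key_data = json.load(handle)

    # Fail early with a readable message if this is not the file we expect.
    missing = [field for field in REQUIRED_FIELDS if field not in key_data]
    if missing:
        raise ValueError("key file is missing fields: " + ", ".join(missing))
    if key_data["type"] != "service_account":
        raise ValueError("key file is not a service account key")

    return key_data["client_email"]
```

Calling `read_service_account_email("/path/to/token.json")` should give you the address to share the Drive folder with.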

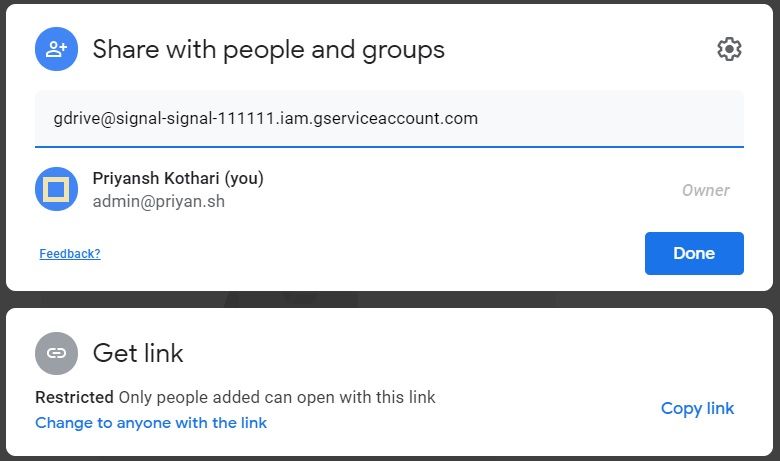

[email protected]

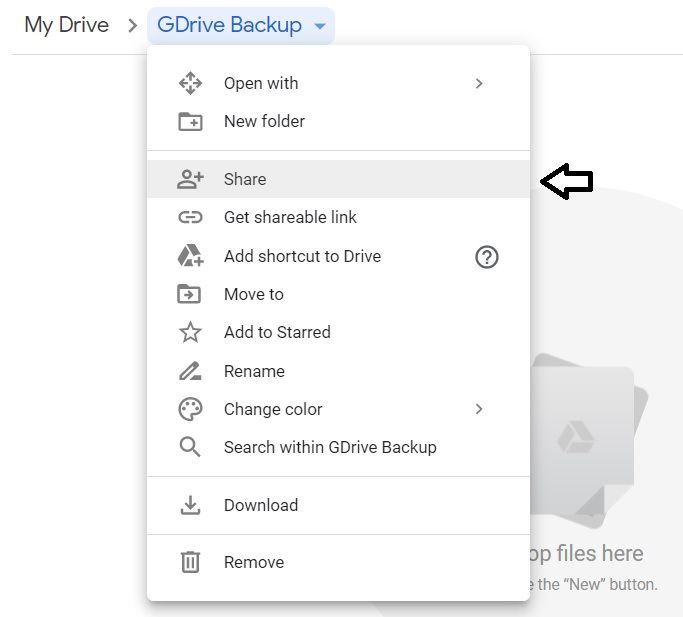

Now in your personal Google Drive, create a new folder and share it with the email you just noted.

It should look something like this. Click "Done" to save it. This is the folder where you will find your backups.

So, the Google Drive part is done, and now we will set up the script on our server. Keep the token file, the email and the link to the folder you just shared at hand; we will need them in the next section.

Setting up the Server

First, run the following commands to install all the required Python packages.

sudo apt-get install python3-pip

pip3 install google-api-python-client

pip3 install requests pathlib httplib2==0.15.0

What the script will do:

1. Back up the directory you choose to Google Drive.

2. Delete any old backups from your web server.
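To give a feel for how these two steps could fit together, here is a rough sketch. It is not the script from the repository: the function names, the `.tar.gz` format, the filename pattern and the `/tmp` staging directory are all assumptions made for illustration, and it reads step 2 as removing the local archive once the upload has finished. It relies on `google-auth`, which is installed as a dependency of `google-api-python-client`.

```python
import os
import tarfile
from datetime import datetime


def make_archive(directory, output_dir, now=None):
    """Pack `directory` into a timestamped .tar.gz inside `output_dir`."""
    now = now or datetime.now()
    base = os.path.basename(os.path.normpath(directory))
    name = f"{base}-{now:%Y-%m-%d_%H-%M-%S}.tar.gz"  # hypothetical naming scheme
    archive_path = os.path.join(output_dir, name)
    with tarfile.open(archive_path, "w:gz") as tar:
        tar.add(directory, arcname=base)
    return archive_path


def upload_to_drive(archive_path, token_path, parent_id):
    """Upload the archive into the shared Drive folder and return its file ID."""
    # Imported here so make_archive() works even without the Google packages.
    from google.oauth2 import service_account
    from googleapiclient.discovery import build
    from googleapiclient.http import MediaFileUpload

    creds = service_account.Credentials.from_service_account_file(
        token_path, scopes=["https://www.googleapis.com/auth/drive"]
    )
    service = build("drive", "v3", credentials=creds)
    media = MediaFileUpload(archive_path, mimetype="application/gzip", resumable=True)
    body = {"name": os.path.basename(archive_path), "parents": [parent_id]}
    created = service.files().create(body=body, media_body=media, fields="id").execute()
    return created["id"]


def backup(directory, token_path, parent_id, output_dir="/tmp"):
    """Step 1: archive and upload. Step 2: remove the local archive afterwards."""
    archive_path = make_archive(directory, output_dir)
    try:
        return upload_to_drive(archive_path, token_path, parent_id)
    finally:
        os.remove(archive_path)
```

Keeping the archive step separate from the upload step makes it easy to try the first half on your server before any Google credentials are involved.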

Now download the script from my GitHub repository and fill in the email and the ID of the folder, which you can take from the folder link you noted down.

parentID = '1AFfxg_lpfIDbJf0IoH7XP4sZILqZ7Gen'

email = '[email protected]'
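The folder ID is the part of the shared link that follows `/folders/`. If you prefer to pull it out programmatically, a small helper along these lines should do. `folder_id_from_link` is a hypothetical name, and the link in the usage note simply wraps the ID shown above in the usual Drive URL shape.

```python
import re
from urllib.parse import urlparse


def folder_id_from_link(link):
    """Extract the folder ID from a Google Drive folder link."""
    # Only look at the path, so query strings like ?usp=sharing are ignored.
    path = urlparse(link).path
    match = re.search(r"/folders/([A-Za-z0-9_-]+)", path)
    if match is None:
        raise ValueError("no folder ID found in link: " + link)
    return match.group(1)
```

For example, `folder_id_from_link("https://drive.google.com/drive/folders/1AFfxg_lpfIDbJf0IoH7XP4sZILqZ7Gen?usp=sharing")` returns the value used for `parentID` above.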

Now save this Python script. Copy the token file to your server.

It's time to execute the script and check that it works. You need to choose the action, then pass the token location and the directory you want to back up in the command:

sudo python3 gdrive.py backup [token location] [directory location]

So our command will look as follows to back up the "www" folder:

sudo python3 gdrive.py backup /var/token.json /var/www/
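The command shape above, an action followed by positional paths, maps naturally onto subcommands. The following is only a guess at how such a command line could be parsed with `argparse`. The actual script may read its arguments differently, and `build_parser` is a hypothetical name.

```python
import argparse


def build_parser():
    """Build a parser for: gdrive.py <action> <token> [directory]."""
    parser = argparse.ArgumentParser(prog="gdrive.py")
    actions = parser.add_subparsers(dest="action", required=True)

    # backup needs both the token file and the directory to archive.
    backup = actions.add_parser("backup", help="archive a directory and upload it")
    backup.add_argument("token", help="path to the service account JSON key")
    backup.add_argument("directory", help="directory to back up")

    # clean only needs the token file.
    clean = actions.add_parser("clean", help="delete old backups")
    clean.add_argument("token", help="path to the service account JSON key")

    return parser
```

With this layout, `build_parser().parse_args(["backup", "/var/token.json", "/var/www/"])` yields an object whose `action`, `token` and `directory` attributes hold the three values from the command line.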

That's it! You will see the backup being archived and then uploaded. The archive has the current date and time in its filename. The other action you can pass is "clean", which deletes any old backups from your Google Drive service account. Here is an example:

sudo python3 gdrive.py clean /var/token.json

Now we need to automate this. I will set it to back up the files on the 1st day of every month. To do this, we will create a shell script and run it with a cron job.

Create a shell script "backup.sh" with the following content on your web server.

#! /bin/bash

cd /var/backup #Location of your python script.

/usr/bin/python3 gdrive.py backup /var/token.json /var/www/

Provide the appropriate permission to the shell script to make it executable:

chmod +x backup.sh

Now add a new cron entry by editing the crontab file:

crontab -e

Choose "1" and add the following line, assuming your "backup.sh" is in the "/var" folder:

0 0 1 * * /var/backup.sh

Now press "Ctrl+X" and type "Y" to save the file. The script should now run every month and back up your files automatically.
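If the five fields in the cron line look cryptic, they stand for minute, hour, day of month, month and day of week, in that order. The sketch below is a deliberately tiny matcher that only understands `*` and plain numbers, which is all our entry uses. It is meant for checking your understanding of the schedule, not as a replacement for cron, and `matches` is a hypothetical helper name.

```python
from datetime import datetime

FIELD_NAMES = ("minute", "hour", "day of month", "month", "day of week")


def matches(expression, moment):
    """Return True if `moment` satisfies a simple five-field cron expression.

    Not handled: ranges, lists, steps, names, and cron's special rule for
    entries that restrict both day of month and day of week.
    """
    fields = expression.split()
    if len(fields) != len(FIELD_NAMES):
        raise ValueError("expected 5 fields, got " + str(len(fields)))

    # cron counts Sunday as 0, while isoweekday() counts Sunday as 7.
    actual = (
        moment.minute,
        moment.hour,
        moment.day,
        moment.month,
        moment.isoweekday() % 7,
    )
    return all(field == "*" or int(field) == value
               for field, value in zip(fields, actual))
```

For our entry, `matches("0 0 1 * *", datetime(2024, 3, 1, 0, 0))` is true, while any other day of the month or any other time of day gives false. In other words, the backup runs at midnight on the 1st of each month.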